- The RAL (register abstraction layer) provides access to the DUT registers and also keeps track of their contents.

- UVM RAL can be used to automate the creation of a high-level, object-oriented abstraction model of the registers and memories in the DUT.

- The register layer makes the register abstraction, and access to register contents, independent of the bus protocol used to transfer data into and out of the registers inside the design.

- The hierarchical model provided by RAL makes test bench components easy to reuse.

- Changes to the initial register configuration or to the specification can be propagated easily through the entire environment. RAL supports both front door and backdoor access. Backdoor access does not use the bus interface; instead, it uses HDL paths to communicate directly with the device. The device registers can therefore be reconfigured in zero simulation time through the backdoor, and verification can start immediately.

- One more advantage of backdoor access is that it can be used to verify that front door accesses happen correctly. To do this, a front door write is checked with a backdoor read.
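The front door and backdoor paths described above can be illustrated with a small model. This is only a sketch in Python, not UVM code: the `Dut` and `RegModel` classes, the register names, and the addresses are all hypothetical, and the `bus_cycles` counter is a stand-in for simulation time spent on the bus.

```python
class Dut:
    """Stand-in for the design: registers reachable by bus address or by name."""

    def __init__(self):
        self.regs = {"ctrl": 0, "status": 0}             # hypothetical registers
        self.addr_map = {0x00: "ctrl", 0x04: "status"}   # hypothetical addresses
        self.bus_cycles = 0                              # counts front door traffic

    def bus_write(self, addr, data):
        self.bus_cycles += 1
        self.regs[self.addr_map[addr]] = data

    def bus_read(self, addr):
        self.bus_cycles += 1
        return self.regs[self.addr_map[addr]]


class RegModel:
    """Toy register model that keeps a mirror of the DUT register contents."""

    def __init__(self, dut):
        self.dut = dut
        self.mirror = dict.fromkeys(dut.regs, 0)
        self.addr_of = {name: addr for addr, name in dut.addr_map.items()}

    def write(self, name, data, backdoor=False):
        if backdoor:
            self.dut.regs[name] = data                   # direct path, no bus activity
        else:
            self.dut.bus_write(self.addr_of[name], data)
        self.mirror[name] = data

    def read(self, name, backdoor=False):
        if backdoor:
            value = self.dut.regs[name]
        else:
            value = self.dut.bus_read(self.addr_of[name])
        self.mirror[name] = value
        return value


dut = Dut()
ral = RegModel(dut)
ral.write("ctrl", 0xA5)                      # front door write over the bus
readback = ral.read("ctrl", backdoor=True)   # backdoor read checks the write
print(hex(readback), dut.bus_cycles)
```

In this sketch only the front door write increments `bus_cycles`, which mirrors the idea that the backdoor check costs no bus time.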


Tuesday 10 November 2015

UVM Interview Questions - 5

Monday 5 October 2015

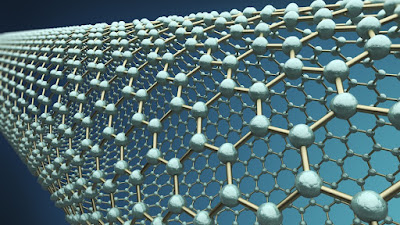

IBM steps forward to replace Silicon Transistors with Carbon Nanotubes

Figure: Carbon nanotube

- Carbon nanotube transistors can operate at ten nanometers

- Equivalent to 10,000 times thinner than a strand of human hair

- Less than half the size of today’s leading silicon technology

- Could also mean wearables that attach directly to skin and internal organs

Sunday 4 October 2015

International Conference on VLSI Design and Embedded Systems - Jan 2016

Monday 21 September 2015

SVEditor - A SystemVerilog Editor Eclipse Plugin

Friday 11 September 2015

UVM Interview Questions - 4

Thursday 10 September 2015

UVM Interview Questions - 3

Wednesday 9 September 2015

UVM Interview Questions - 1

- create

- start_item

- randomize

- finish_item

- get_response (optional)

UVM Interview Questions - 2

Ans: UVM is a methodology based on the SystemVerilog language; it is not a language in its own right. It is a standardized methodology that defines best practices for verification, with an emphasis on efficient reuse, and it is standardized as IEEE 1800.2.

Q17: What are the benefits of using UVM?

Ans: Some of the benefits of using UVM are:

- Modularity and Reusability – The methodology is built from modular components (driver, sequencer, agent, env, etc.), which allows components to be reused from unit level to multi-unit or chip level verification, as well as across projects.

- Separating Tests from Testbenches – Tests, in the form of stimulus and sequences, are kept separate from the testbench hierarchy, so stimulus can be reused across different units or across projects.

- Simulator independent – The base class library and the methodology are supported by all major simulators, so there is no dependence on any specific simulator.

- Better control on Stimulus generation – The sequence methodology gives good control over stimulus generation. Sequences can be developed in several ways, including randomization, layered sequences, and virtual sequences, which provides a rich stimulus generation capability.

- Easy configuration – The configuration mechanism simplifies configuring objects deep in the hierarchy. Testbench components can be configured according to the verification environment that uses them, without worrying about how deep a component sits in the testbench hierarchy.

- Factory mechanism – The factory mechanism simplifies modifying components. Creating each component through the factory allows it to be overridden in different tests or environments without changing the underlying code base.

- Name of the phase task or function

- Top down or bottom up phase

- Task or function


UVM Interview Questions

- First methodology & second collection of class libraries for Automation

- Reusability through testbench

- Plug & Play of verification IPs

- Generic Testbench Development

- Vendor & Simulator Independent

- Smart testbench, i.e. it generates legal stimulus from a pre-planned coverage plan

- Supports CDV (Coverage Driven Verification)

- Supports CRV (Constrained Random Verification)

- UVM standardized under the Accellera System Initiative

- Register modeling

- Quasi Static Entity (after build phase it is available throughout the simulation)

- Always tied to a given hardware (DUT interface) or a TLM port

- Has a phasing mechanism to control the behavior of the simulation

- Configuration Component Topology

- Dynamic Entity (created when needed, transferred from one component to another, and then dereferenced)

- Not tied to a given hardware or any TLM port

- No phasing mechanism

- List of UVM Phases:

- build_phase

- connect_phase

- end_of_elaboration_phase

- start_of_simulation_phase

- run_phase (task), which runs in parallel with the following run-time sub-phases:
  - pre_reset_phase
  - reset_phase
  - post_reset_phase
  - pre_configure_phase
  - configure_phase
  - post_configure_phase
  - pre_main_phase
  - main_phase
  - post_main_phase
  - pre_shutdown_phase
  - shutdown_phase
  - post_shutdown_phase

- extract_phase

- check_phase

- report_phase
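The phase list above can be written down as data, together with a toy traversal that shows what "top down" and "bottom up" mean for a component hierarchy. This is a sketch in Python rather than SystemVerilog: the `TREE` hierarchy and the `visit_order` helper are hypothetical, and the sibling ordering used here is an assumption made only for illustration. The function-or-task and traversal attributes follow the standard UVM definitions (build_phase is top-down, the other function phases listed are bottom-up).

```python
# (phase name, function or task, traversal order) for the phases listed above
PHASES = [
    ("build_phase", "function", "top-down"),
    ("connect_phase", "function", "bottom-up"),
    ("end_of_elaboration_phase", "function", "bottom-up"),
    ("start_of_simulation_phase", "function", "bottom-up"),
    ("run_phase", "task", "parallel"),
    ("extract_phase", "function", "bottom-up"),
    ("check_phase", "function", "bottom-up"),
    ("report_phase", "function", "bottom-up"),
]

# The twelve run-time sub-phases that execute alongside run_phase
RUN_TIME_SUBPHASES = [
    f"{prefix}{stage}_phase"
    for stage in ("reset", "configure", "main", "shutdown")
    for prefix in ("pre_", "", "post_")
]

# Hypothetical component hierarchy: parent name -> child names
TREE = {
    "test": ["env"],
    "env": ["agent", "scoreboard"],
    "agent": ["driver", "monitor"],
}


def visit_order(root, traversal):
    """Return the order in which components are visited for a function phase."""
    below = [name for child in TREE.get(root, []) for name in visit_order(child, traversal)]
    return [root] + below if traversal == "top-down" else below + [root]


for name, kind, traversal in PHASES:
    if kind == "function":
        print(f"{name}: {' -> '.join(visit_order('test', traversal))}")
    else:
        print(f"{name}: all components run concurrently ({len(RUN_TIME_SUBPHASES)} sub-phases)")
```

Building top-down means a parent exists before it creates its children, while connecting bottom-up means the children's ports exist before the parent wires them together.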

Wednesday 2 September 2015

Intel's Skylake Processors for PCs, Tablets and Servers

Friday 21 August 2015

Resistive Memory - ReRam

The memory tech that will eventually replace NAND flash, finally in market

What is ReRAM?

Resistive random-access memory (RRAM or ReRAM) is a type of non-volatile (NV) random-access (RAM) computer memory that works by changing the resistance across a dielectric solid-state material, often referred to as a memristor. The biggest advantage of ReRAM technology is its good compatibility with CMOS technologies.

It is under development by a number of companies, and some have already patented their own versions of the technology. The memory operates by changing the resistance of a special dielectric material called a memristor (memory resistor), whose resistance varies depending on the applied voltage.

What makes ReRAM?

From the viewpoint of material choice, the advantage of ReRAM is evident. Metal-oxide-metal (MOM) structures can be fabricated easily using oxides that are already widely used in current semiconductor technologies. Low-current ReRAM operation was reported in a CuOx-based MOM structure, in which the CuOx layer was grown by thermal oxidation of the 0.18-μm Cu. NiO and CoO are being intensively studied as oxide materials for ReRAM, and these transition metal elements are also used in metal silicides employed as gate materials. Recently, the good scaling feasibility of ReRAM was demonstrated in an HfOx-based memory with a cell size of 30 nm. The devices in a 1-kbit array exhibited a high device yield (~100%) and robust cycling endurance (>10^6 cycles) with a pulse width of 40 ns. The memory cell consisted of a TiN/Ti/HfOx/TiN structure, in which the Ti overlayer played the role of oxygen gettering for better ReRAM operation. The gettering effect has already been investigated in HfOx as a high-k material for the gate dielectric films in CMOS devices. The academic and technological knowledge about high-k materials will be very useful in the design of the stacking structure for a ReRAM device.

How ReRAM Works?

RRAM relies on a kind of dielectric material that is not permanently damaged when dielectric breakdown occurs; in a memristor, the dielectric breakdown is temporary and reversible. When a voltage is deliberately applied to a memristor, microscopic conductive paths called filaments are created in the material. The filaments are caused by phenomena such as metal migration or even physical defects. Filaments can be broken and re-formed by applying different external voltages. It is this creation and destruction of filaments in large quantities that allows digital data to be stored. Materials that have been tested and shown to have memristor characteristics include oxides of titanium and nickel, some electrolytes, semiconductor materials, and even a few organic compounds.

The principal advantage of RRAM over other non-volatile technologies is its high switching speed. Because the memristors are so thin, RRAM has great potential for higher storage density, faster reads and writes, lower power usage, and lower cost than flash memory. Flash memory is running into scaling limits set by its materials, which is why RRAM is seen as a candidate to replace it.

Monday 29 June 2015

Difference between simulation and emulation

Think of a flight simulator as an example. It looks and feels like you are flying an airplane, but you are completely disconnected from the reality of flying the plane, and you can bend or break the rules as you see fit. For example, you can fly an Airbus A380 upside down between London and Sydney without breaking it.

An emulation is a system that behaves exactly like something else, and abides by all of the rules of the system being emulated. It’s like duplicating every aspect of the original device’s behaviour. It is effectively a complete replication of another system, right down to being binary compatible with the emulated system's inputs and outputs, but operating in a different environment to the environment of the original emulated system. The rules are fixed, and cannot be changed or the system fails.

Today, hardware emulation has become a very popular verification tool for the following reasons:

In the past few years, the emulation user community has expanded exponentially by the addition of software developers to the traditional base of hardware designers and verification engineers.

Also, uses of hardware emulation have multiplied because of its versatility as a resource for debugging both the hardware and software of complex system-on-chip (SoC) designs. Hardware emulation is the only verification tool that can be deployed in more than one mode. In fact, it can be used in four main modes, some of which can be combined for added versatility. Because of this resourcefulness, hardware emulation can be used to achieve several verification objectives.

The following are the deployment modes of a hardware emulator. They are characterized by the type of stimulus applied to the DUT:

- In-Circuit Emulation (ICE): This is the traditional way of deploying hardware emulation. The DUT is mapped inside the emulator and connected to the target system in place of a chip or processor, for debug prior to silicon availability.

- Transaction Based Acceleration (TBX): Transaction-based emulation moves verification up a level of abstraction from the register transfer level (RTL), improving performance and debug productivity. It is gaining popularity over the ICE mode because the physical target system is replaced by a virtual target system written in a hardware verification language (HVL) such as SystemVerilog, SystemC, or C++.

- Simulation Testbench Acceleration: In this mode, an RTL testbench drives the DUT in the emulator via the programming language interface (PLI). In general, this is the slowest mode, but it has some advantages, such as not requiring changes to the testbench.

- Embedded Software Acceleration: In this mode, the software code is executed on the DUT processor mapped inside the emulator. This is the fastest mode, making it the choice for processing the billions of verification cycles needed to boot an operating system.

It is possible to mix some of the above modes, such as processing embedded software together with a virtual testbench driving the DUT via verification IP or even in ICE mode.

Wednesday 13 May 2015

IEEE Standards

More specifically, the IEEE 802 standards are restricted to networks carrying variable-size packets. By contrast, in cell relay networks data is transmitted in short, uniformly sized units called cells. Isochronous networks, where data is transmitted as a steady stream of octets, or groups of octets, at regular time intervals, are also out of the scope of this standard. The number 802 was simply the next free number IEEE could assign, though “802” is sometimes associated with the date the first meeting was held — February 1980.

The services and protocols specified in IEEE 802 map to the lower two layers (Data Link and Physical) of the seven-layer OSI networking reference model. In fact, IEEE 802 splits the OSI Data Link Layer into two sub-layers named Logical Link Control (LLC) and Media Access Control (MAC), so that the layers can be listed like this:

- Data link layer
  - LLC Sublayer
  - MAC Sublayer

- Physical layer

The IEEE 802 family of standards is maintained by the IEEE 802 LAN/MAN Standards Committee (LMSC). The most widely used standards are for the Ethernet family, Token Ring, Wireless LAN, Bridging and Virtual Bridged LANs. An individual Working Group provides the focus for each area.

IEEE developed a set of 802 network standards. They include:

- IEEE 802.1: Standards related to network management.

- IEEE 802.2: General standard for the data link layer in the OSI Reference Model. The IEEE divides this layer into two sub-layers -- the logical link control (LLC) layer and the media access control (MAC) layer. The MAC layer varies for different network types and is defined by standards IEEE 802.3 through IEEE 802.5.

- IEEE 802.3: Defines the MAC layer for bus networks that use CSMA/CD. This is the basis of the Ethernet standard.

- IEEE 802.4: Defines the MAC layer for bus networks that use a token-passing mechanism (token bus networks).

- IEEE 802.5: Defines the MAC layer for token-ring networks.

- IEEE 802.6: Standard for Metropolitan Area Networks (MANs).

Tuesday 12 May 2015

Ethernet–Introduction

Computer networking technologies are the glue that binds these elements together. Networking allows one computer to send information to and receive information from another. We can classify network technologies as belonging to one of two basic groups. Local area network (LAN) technologies connect many devices that are relatively close to each other, usually in the same building. The library terminals that display book information would connect over a local area network. Wide area network (WAN) technologies connect a smaller number of devices that can be many kilometers apart.

In comparison to WANs, LANs are faster and more reliable, but improvements in technology continue to blur the line of demarcation. Fiber optic cables have allowed LAN technologies to connect devices tens of kilometers apart, while at the same time greatly improving the speed and reliability of WANs.


Ethernet

The Ethernet standard has grown to encompass new technologies as computer networking has matured. Specified in a standard, IEEE 802.3, an Ethernet LAN typically uses coaxial cable or special grades of twisted pair wires. Ethernet is also used in wireless LANs. Ethernet uses the CSMA/CD access method to handle simultaneous demands. The most commonly installed Ethernet systems are called 10BASE-T and provide transmission speeds up to 10 Mbps. Devices are connected to the cable and compete for access using a Carrier Sense Multiple Access with Collision Detection (CSMA/CD) protocol. Fast Ethernet or 100BASE-T provides transmission speeds up to 100 megabits per second and is typically used for LAN backbone systems, supporting workstations with 10BASE-T cards. Gigabit Ethernet provides an even higher level of backbone support at 1000 megabits per second (1 gigabit or 1 billion bits per second). 10-Gigabit Ethernet provides up to 10 billion bits per second.
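To put the quoted speeds in perspective, the sketch below computes how long it takes to place one frame on the wire at each rate. The 1500-byte frame size is an assumption chosen for illustration, and the calculation ignores the preamble, inter-frame gap, and any CSMA/CD contention, so it is a lower bound rather than a measured figure.

```python
# Nominal data rates in bits per second, taken from the paragraph above
RATES_BPS = {
    "10BASE-T": 10_000_000,
    "Fast Ethernet (100BASE-T)": 100_000_000,
    "Gigabit Ethernet": 1_000_000_000,
    "10-Gigabit Ethernet": 10_000_000_000,
}


def serialization_time_ns(frame_bytes, rate_bps):
    """Time in nanoseconds to transmit frame_bytes at rate_bps (integer math)."""
    return frame_bytes * 8 * 1_000_000_000 // rate_bps


FRAME_BYTES = 1500  # assumed frame size, for illustration only

for name, rate in RATES_BPS.items():
    microseconds = serialization_time_ns(FRAME_BYTES, rate) / 1000
    print(f"{name}: {microseconds} us per {FRAME_BYTES}-byte frame")
```

Each step up in the Ethernet family cuts the per-frame time by a factor of ten, from 1.2 ms at 10 Mbps down to 1.2 µs at 10 Gbps for this frame size.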


Sunday 15 March 2015

A Cache Memory

where Hit_counts and Miss_counts are the hit and miss probabilities.

Figure: Fully associative cache

Figure: Direct mapped cache

2. 2-way set associative cache - In this type of cache we have two groups of lines, each containing 64 lines. The address has the same fields as in the direct mapped cache, but here the tag has 21 bits and the index has 6 bits.

2. 4-way set associative cache - Here we have 4 groups, each containing 32 lines. The index has 5 bits and the tag has 22 bits.

Figure: 2-way and 4-way set associative caches
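The tag and index widths quoted above can be reproduced with a short calculation. The sketch below assumes a 32-bit address, 128 cache lines in total, and a 32-byte line (5 offset bits); these values are not stated in the text but are the ones that make the quoted tag and index widths add up. The function names are hypothetical.

```python
def cache_fields(total_lines, ways, line_bytes=32, addr_bits=32):
    """Return the number of sets and the tag/index/offset widths in bits.

    Assumes total_lines // ways and line_bytes are powers of two.
    """
    sets = total_lines // ways
    index_bits = sets.bit_length() - 1          # log2 of the number of sets
    offset_bits = line_bytes.bit_length() - 1   # log2 of the line size
    tag_bits = addr_bits - index_bits - offset_bits
    return {"sets": sets, "tag": tag_bits, "index": index_bits, "offset": offset_bits}


def split_address(addr, fields):
    """Split an address into (tag, index, offset) using the field widths."""
    offset = addr & ((1 << fields["offset"]) - 1)
    index = (addr >> fields["offset"]) & ((1 << fields["index"]) - 1)
    tag = addr >> (fields["offset"] + fields["index"])
    return tag, index, offset


TOTAL_LINES = 128  # assumed: 2 groups x 64 lines = 4 groups x 32 lines

for label, ways in (("direct mapped", 1), ("2-way", 2), ("4-way", 4)):
    f = cache_fields(TOTAL_LINES, ways)
    print(f"{label}: {f['sets']} sets, tag={f['tag']} index={f['index']} offset={f['offset']}")
```

Doubling the associativity halves the number of sets, so one bit moves from the index to the tag each time: 20/7 for direct mapped under these assumptions, then 21/6 for 2-way and 22/5 for 4-way.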
