Description of the Project

This paper describes the hardware design flow of a lifting-based 2-D Forward Discrete Wavelet Transform (FDWT) processor for JPEG 2000. To produce high-quality images in a JPEG 2000 codec, an effective 2-D FDWT algorithm is applied to the input image file to obtain the decomposed image coefficients. The lifting scheme reduces the number of execution steps to almost one-half of those needed with a conventional convolution approach. Initially, the lifting-based 2-D FDWT algorithm was developed using Matlab. The FDWT modules were simulated using XPS (8.1i) design tools. The final design was verified with Matlab image processing tools.

The simulation results were compared with the Matlab results to verify the proper functionality of the developed module. The motivation in designing the hardware modules of the FDWT was to reduce its complexity, enhance its performance, and make it suitable for development on a reconfigurable FPGA-based platform for VLSI implementation. Results of the decomposition of a test image validate the design. The entire system runs at a 215 MHz clock frequency and reaches a speed suitable for several real-time applications. The simulation results show that the lifting scheme requires less memory.
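The lifting steps mentioned above can be illustrated in software. The following is a minimal Python sketch, not the VHDL design itself. It assumes the reversible LeGall 5/3 filter (one of the two wavelet filters defined in JPEG 2000 Part 1; the text does not state which filter the processor uses), even-length integer inputs, and symmetric boundary extension. The function names fdwt53_1d, idwt53_1d and fdwt53_2d are hypothetical.

```python
def fdwt53_1d(x):
    """One level of the reversible 5/3 lifting transform (even-length ints)."""
    n = len(x)
    assert n >= 2 and n % 2 == 0, "sketch assumes an even-length input"
    even, odd = x[0::2], x[1::2]
    half = n // 2
    # Predict step: detail = odd sample minus floor of the mean of its even neighbours.
    d = []
    for i in range(half):
        right = even[i + 1] if i + 1 < half else even[i]  # symmetric extension
        d.append(odd[i] - ((even[i] + right) >> 1))
    # Update step: approximation = even sample plus a rounded quarter of nearby details.
    s = []
    for i in range(half):
        left = d[i - 1] if i > 0 else d[0]  # symmetric extension
        s.append(even[i] + ((left + d[i] + 2) >> 2))
    return s, d


def idwt53_1d(s, d):
    """Inverse of fdwt53_1d: undo the update step, then the predict step."""
    half = len(s)
    even = []
    for i in range(half):
        left = d[i - 1] if i > 0 else d[0]
        even.append(s[i] - ((left + d[i] + 2) >> 2))
    x = []
    for i in range(half):
        right = even[i + 1] if i + 1 < half else even[i]
        x.append(even[i])
        x.append(d[i] + ((even[i] + right) >> 1))
    return x


def _columns(matrix):
    """Apply the 1-D transform to every column; return (low, high) sub-matrices."""
    pairs = [fdwt53_1d(list(col)) for col in zip(*matrix)]
    low = [list(row) for row in zip(*[s for s, _ in pairs])]
    high = [list(row) for row in zip(*[d for _, d in pairs])]
    return low, high


def fdwt53_2d(img):
    """One decomposition level: rows first, then columns. Returns LL, HL, LH, HH."""
    rows = [fdwt53_1d(list(r)) for r in img]
    low_h = [s for s, _ in rows]    # horizontally low-pass half
    high_h = [d for _, d in rows]   # horizontally high-pass half
    ll, lh = _columns(low_h)
    hl, hh = _columns(high_h)
    return ll, hl, lh, hh


if __name__ == "__main__":
    samples = [1, 2, 3, 4, 5, 6, 7, 8]
    approx, detail = fdwt53_1d(samples)
    print(approx, detail)
    print(idwt53_1d(approx, detail) == samples)
```

Each lifting step uses only additions and shifts and can overwrite its input in place, which is in line with the reduced operation count and memory requirement claimed for the lifting scheme.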

Introduction

A majority of today’s Internet bandwidth is estimated to be used for images and video. Recent multimedia applications for handheld and portable devices are constrained by the available wireless bandwidth, which remains limited even with new connection standards. The JPEG image compression standard that is in widespread use today took several years to be perfected. Wavelet-based techniques for image compression, such as JPEG2000, have a lot more to offer than conventional methods in terms of compression ratio. Wavelet implementations are still under development and are being perfected. Flexible, energy-efficient hardware implementations that can handle multimedia functions such as image processing, coding and decoding are critical, especially in hand-held portable multimedia wireless devices.

Background

Data compression is a powerful enabling technology that plays a vital role in the information age. Among the various types of data commonly transferred over networks, image and video data comprise the bulk of the bit traffic. For example, current estimates indicate that image data take up over 40% of the volume on the Internet. The explosive growth in demand for image and video data, coupled with delivery bottlenecks, has kept compression technology at a premium.

Among the several compression standards available, the JPEG image compression standard is in widespread use today. JPEG uses the Discrete Cosine Transform (DCT) as the transform, applied to 8-by-8 blocks of image data. The newer standard, JPEG2000, is based on the Wavelet Transform (WT). The Wavelet Transform offers multi-resolution image analysis, which appears to be well matched to the low-level characteristics of human vision. The DCT is essentially unique, but the WT has many possible realizations. Wavelets provide us with a basis more suitable for representing images.

This is because wavelets can represent information at a variety of scales, capturing local contrast changes as well as larger-scale structures, and are thus a better fit for image data.

Aim of the project

The main aim of the project is to implement and verify the image compression technique and to investigate the possibility of hardware acceleration of the DWT for signal processing applications. A hardware design has to be provided that achieves high performance in comparison to a software implementation of the DWT. The goals of the project are to:

. Implement the design in a hardware description language (here, VHDL).

. Perform simulation using tools such as Xilinx ISE 8.1i.

. Check the correctness of the design and synthesize it for a Spartan 3E FPGA kit.

The short-time Fourier transform (STFT) represents a sort of compromise between the time- and frequency-based views of a signal. It provides some information about both when and at what frequencies a signal event occurs. However, you can only obtain this information with limited precision, and that precision is determined by the size of the window.

While the STFT compromise between time and frequency information can be useful, the drawback is that once you choose a particular size for the time window, that window is the same for all frequencies. Many signals require a more flexible approach—one where we can vary the window size to determine more accurately either time or frequency.

Problem Present in Fourier Transform

The fundamental idea behind wavelets is to analyze according to scale. Indeed, some researchers feel that using wavelets means adopting a whole new mind-set or perspective in processing data. Wavelets are functions that satisfy certain mathematical requirements and are used in representing data or other functions. This idea is not new. Approximation using superposition of functions has existed since the early 1800s, when Joseph Fourier discovered that he could superpose sines and cosines to represent other functions.

However, in wavelet analysis, the scale used to look at data plays a special role. Wavelet algorithms process data at different scales or resolutions. Looking at a signal (or a function) through a large “window,” gross features could be noticed. Similarly, looking at a signal through a small “window,” small features could be noticed. The result in wavelet analysis is to see both the forest and the trees, so to speak.

This makes wavelets interesting and useful. For many decades scientists have wanted more appropriate functions than the sines and cosines, which are the basis of Fourier analysis, to approximate choppy signals. By their definition, these functions are non-local (and stretch out to infinity). They therefore do a very poor job in approximating sharp spikes. But with wavelet analysis, we can use approximating functions that are contained neatly in finite domains. Wavelets are well-suited for approximating data with sharp discontinuities.

The wavelet analysis procedure is to adopt a wavelet prototype function, called an analyzing wavelet or mother wavelet. Temporal analysis is performed with a contracted, high-frequency version of the prototype wavelet, while frequency analysis is performed with a dilated, low-frequency version of the same wavelet. Because the original signal or function can be represented in terms of a wavelet expansion (using coefficients in a linear combination of the wavelet functions), data operations can be performed using just the corresponding wavelet coefficients.

Furthermore, if the wavelets best adapted to the data are selected, or the coefficients below a threshold are truncated, the resulting data are sparsely represented. This sparse coding makes wavelets an excellent tool in the field of data compression. Other applied fields that are using wavelets include astronomy, acoustics, nuclear engineering, sub-band coding, signal and image processing, neurophysiology, music, magnetic resonance imaging, speech discrimination, optics, fractals, turbulence, earthquake prediction, radar, human vision, and pure mathematics applications such as solving partial differential equations.
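The effect of truncating small coefficients can be seen in a small example. The sketch below is an illustration only: it assumes the Haar wavelet in its average/difference form (the text does not name a wavelet at this point) and an input whose length is a power of two. The names haar_forward, haar_inverse and threshold are hypothetical.

```python
def haar_forward(x):
    """Full Haar decomposition using pairwise averages and differences."""
    approx = list(x)
    details = []
    while len(approx) > 1:
        avg = [(approx[i] + approx[i + 1]) / 2 for i in range(0, len(approx), 2)]
        dif = [(approx[i] - approx[i + 1]) / 2 for i in range(0, len(approx), 2)]
        details = dif + details   # coarser details go in front of finer ones
        approx = avg
    return approx + details


def haar_inverse(coeffs):
    """Rebuild the signal from the layout produced by haar_forward."""
    approx = list(coeffs[:1])
    pos = 1
    while pos < len(coeffs):
        dif = coeffs[pos:pos + len(approx)]
        approx = [v for a, d in zip(approx, dif) for v in (a + d, a - d)]
        pos += len(dif)
    return approx


def threshold(coeffs, t):
    """Set every coefficient with magnitude below t to zero."""
    return [c if abs(c) >= t else 0.0 for c in coeffs]


if __name__ == "__main__":
    signal = [4, 4, 4, 4, 10, 10, 10, 11]
    coeffs = haar_forward(signal)
    sparse = threshold(coeffs, 1.0)
    print(coeffs)
    print(sparse, "non-zero:", sum(1 for c in sparse if c != 0))
    print(haar_inverse(sparse))
```

For this piecewise-constant signal only two of the eight coefficients survive the threshold, and the reconstruction from those two differs from the original only slightly in the second half.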

Basically, the wavelet transform (WT) is used to analyze non-stationary signals, i.e., signals whose frequency content varies in time, as the Fourier transform (FT) is not suitable for such signals. To overcome this limitation of the FT, the short-time Fourier transform (STFT) was proposed. There is only a minor difference between the STFT and the FT. In the STFT, the signal is divided into small segments, each of which can be assumed to be stationary. For this purpose, a window function "w" is chosen. The width of this window in time must equal the length of the signal segment over which the signal can still be considered stationary. With the STFT, one can obtain the time-frequency response of a signal, which cannot be obtained with the FT.
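The segment-by-segment procedure can be sketched as follows. This is a naive illustration under stated assumptions: a rectangular window "w", a direct DFT per segment rather than an FFT, and a test signal sampled at 64 Hz that switches from a 4 Hz tone to a 16 Hz tone halfway through. The names stft and peak_bins are hypothetical.

```python
import cmath
import math


def stft(signal, win_len, hop):
    """Magnitude spectrum of each windowed segment (rectangular window)."""
    frames = []
    for start in range(0, len(signal) - win_len + 1, hop):
        seg = signal[start:start + win_len]
        spectrum = []
        for k in range(win_len // 2 + 1):
            acc = sum(seg[n] * cmath.exp(-2j * math.pi * k * n / win_len)
                      for n in range(win_len))
            spectrum.append(abs(acc))
        frames.append(spectrum)
    return frames


def peak_bins(frames):
    """Index of the strongest frequency bin in every frame."""
    return [max(range(len(f)), key=f.__getitem__) for f in frames]


if __name__ == "__main__":
    fs = 64  # assumed sampling rate in Hz
    sig = [math.sin(2 * math.pi * 4 * n / fs) for n in range(64)]
    sig += [math.sin(2 * math.pi * 16 * n / fs) for n in range(64, 128)]
    win = 32
    bins = peak_bins(stft(sig, win, win))
    # Each bin is fs / win = 2 Hz wide, so bin index times 2 gives the frequency.
    print([b * fs / win for b in bins])
```

The bin spacing is the sampling rate divided by the window length, so a shorter window localizes the change in time more precisely but separates frequencies less precisely, and that single window length applies to every frequency.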

Scaling

We’ve seen the interrelation of wavelets and quadrature mirror filters. The wavelet function is determined by the high pass filter, which also produces the details of the wavelet decomposition.

There is an additional function associated with some, but not all, wavelets. This is the so-called scaling function. The scaling function is very similar to the wavelet function. It is determined by the low pass quadrature mirror filter. In the same way that iteratively up-sampling and convolving the high pass filter produces a shape approximating the wavelet function, iteratively up-sampling and convolving the low pass filter produces a shape approximating the scaling function.

We’ve already alluded to the fact that wavelet analysis produces a time-scale view of a signal, and now we’re talking about scaling and shifting wavelets.
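The iterative up-sample-and-convolve procedure can be tried on the simplest case. The sketch below assumes Haar filters normalized so that the low pass coefficients sum to 2 (low pass [1, 1], high pass [1, -1]); this normalization and the names upsample, convolve and cascade are choices made for the illustration, not taken from the text.

```python
def upsample(x):
    """Insert a zero between consecutive samples."""
    out = []
    for v in x:
        out.extend([v, 0.0])
    return out[:-1]


def convolve(x, h):
    """Full linear convolution of two sequences."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xv in enumerate(x):
        for k, hv in enumerate(h):
            y[i + k] += xv * hv
    return y


def cascade(seed, lowpass, iterations):
    """Repeatedly up-sample the current shape and convolve it with the low pass filter.

    Seeding with the low pass filter approximates the scaling function;
    seeding with the high pass filter approximates the wavelet function.
    """
    shape = list(seed)
    for _ in range(iterations):
        shape = convolve(upsample(shape), lowpass)
    return shape


if __name__ == "__main__":
    lo = [1.0, 1.0]    # assumed Haar low pass filter
    hi = [1.0, -1.0]   # assumed Haar high pass filter
    print(cascade(lo, lo, 2))   # box shape: Haar scaling function
    print(cascade(hi, lo, 2))   # step up then down: Haar wavelet
```

With longer filters the same loop converges towards smoother shapes as the number of iterations grows.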

What exactly do we mean by scale in this context?

Scaling a wavelet simply means stretching (or compressing) it. To go beyond colloquial descriptions such as “stretching,” we introduce the scale factor, often denoted by the letter a.

If we’re talking about sinusoids, for example, the effect of the scale factor is very easy to see:
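A small numerical illustration, assuming the common convention that a function f(t) scaled by a is f(t / a), so that a smaller scale factor compresses the sinusoid. The names scaled_sine and period are hypothetical.

```python
import math


def scaled_sine(t, a):
    """Evaluate sin(t / a), i.e. sin(t) stretched by the scale factor a."""
    return math.sin(t / a)


def period(a):
    """Period of sin(t / a): the scale factor stretches the period linearly."""
    return 2 * math.pi * a


if __name__ == "__main__":
    for a in (1.0, 0.5, 0.25):
        print(f"a = {a}: sin(t/a) = sin({1 / a:g} t), period = {period(a):.4f}")
```

Halving the scale factor halves the period, so a = 1, 1/2 and 1/4 correspond to sin(t), sin(2t) and sin(4t).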

One-Stage Decomposition

For many signals, the low-frequency content is the most important part. It is what gives the signal its identity. The high-frequency content, on the other hand, imparts flavor or nuance. Consider the human voice. If you remove the high-frequency components, the voice sounds different, but you can still tell what’s being said. However, if you remove enough of the low-frequency components, you hear gibberish. In wavelet analysis, we often speak of approximations and details. The approximations are the high-scale, low-frequency components of the signal. The details are the low-scale, high-frequency components. The filtering process at its most basic level looks like this:

The original signal S passes through two complementary filters and emerges as two signals, A and D. Unfortunately, if we actually perform this operation on a real digital signal, we wind up with twice as much data as we started with. Suppose, for instance, that the original signal S consists of 1000 samples of data. Then the resulting signals will each have 1000 samples, for a total of 2000. These signals A and D are interesting, but we get 2000 values instead of the 1000 we had. There exists a more subtle way to perform the decomposition using wavelets.

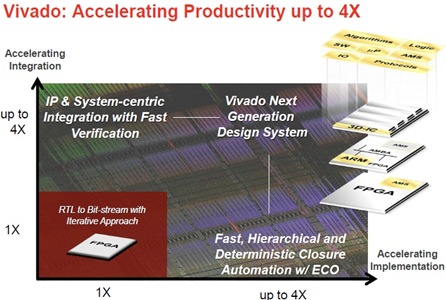
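The sample counts in the one-stage decomposition can be reproduced with a short sketch. It assumes orthonormal Haar filters, periodic extension at the signal boundary, and that the more subtle approach is the usual one of keeping every second output sample (downsampling); the text does not spell out any of these, and the names filter_same and one_stage are hypothetical.

```python
import math


def filter_same(x, h):
    """FIR filtering with periodic extension; output length equals input length."""
    n = len(x)
    return [sum(h[k] * x[(i - k) % n] for k in range(len(h))) for i in range(n)]


def one_stage(x):
    """Return full-rate A and D, plus their downsampled versions cA and cD."""
    r = 1 / math.sqrt(2)
    low, high = [r, r], [r, -r]     # assumed Haar analysis filters
    a_full = filter_same(x, low)    # approximation: low-frequency content
    d_full = filter_same(x, high)   # detail: high-frequency content
    # Keep the odd-indexed outputs; each one combines the pair x[2i], x[2i+1].
    return a_full, d_full, a_full[1::2], d_full[1::2]


if __name__ == "__main__":
    s = [float(i % 7) for i in range(1000)]
    a, d, ca, cd = one_stage(s)
    print(len(a) + len(d))     # total samples without downsampling
    print(len(ca) + len(cd))   # total samples with downsampling
```

With downsampling, the approximation and detail coefficients together contain as many values as the original signal, and for these filters each input pair can still be recovered from one approximation value and one detail value.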

There are system integration bottlenecks, such as design and IP re-use, integrating algorithmic and RTL level IP, mixing DSP, embedded, connectivity and logic, and verification of blocks and “systems”.
